Event-Driven Architecture in Azure Using Event Hubs

How EDA helps us improve stability and maintainability in application development

Brief Introduction

An Event-Driven Architecture (EDA) helps us separate logic and reduce code complexity. It mainly consists of producers and consumers: a producer emits an event, and a consumer consumes it.

In simpler terms, an event-driven architecture incorporates events into its design, so that its code responds to events and carries out predetermined tasks when they occur.

Importance of Event-Driven Architecture

In simple terms, EDA gives us:

Performance

Decoupling of code

Scalability

Asynchronous Programming

Cost Effective

We can use it with microservice architecture.

Productivity increases: since we are decoupling a monolithic architecture, more developers can work on individual features in parallel. Decoupling allows for a faster development cycle.

Reduces API response time.

Handles failure scenarios: if one service is down, the broker retains the event, so delivery can be retried with exponentially increasing delays for a set period.
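The retry behaviour in the last point can be sketched as follows. This is a minimal illustration rather than broker code: `retry_with_backoff`, `max_attempts` and `base_delay` are hypothetical names, and the `sleep` function is injectable so the example can run without actually waiting.

```python
import time


def retry_with_backoff(handler, event, max_attempts=5, base_delay=0.5, sleep=time.sleep):
    """Call handler(event), retrying with exponentially growing delays.

    Returns the handler's result, or re-raises the last error once
    max_attempts is exhausted. All names here are illustrative.
    """
    for attempt in range(max_attempts):
        try:
            return handler(event)
        except Exception:
            if attempt == max_attempts - 1:
                raise
            # wait base_delay, then 2x, then 4x, ... before the next attempt
            sleep(base_delay * (2 ** attempt))
```

The delay doubles after every failed attempt, so a service that is briefly down is not flooded with retries while it recovers.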

The main advantage of EDA is that it lets us break a complex architecture into simpler parts. Another is performance. Suppose we need an email service that sends an email when a user signs up on an eCommerce site. If the API sends the email itself, the onboarding request takes more than 500 ms to respond, so we decouple the email service from the API. The API sends the event data to a queue service, and a consumer of that queue processes the data and sends the email to the user. The API now only has to store the data in the database and send an event to the queue, which reduces its response time. The email runs as an asynchronous task in parallel, which improves the user experience: the user does not have to wait for work that is irrelevant to the response. Such tasks should run in the background, decoupled from the main service; otherwise they behave like blocking code.
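A small, in-process sketch of this idea is shown here. It assumes nothing about Azure: a standard-library `queue.Queue` stands in for the queue service, a list stands in for the database, and `onboard_user` and `email_worker` are hypothetical names.

```python
import queue
import threading

email_queue = queue.Queue()   # stands in for the queue service
database = []                 # stands in for the real database
sent_emails = []              # records what the worker "sent"


def onboard_user(user):
    """API handler: store the user, enqueue an event, return at once."""
    database.append(user)
    email_queue.put({"type": "user_onboarded", "email": user["email"]})
    return {"status": "created"}


def email_worker():
    """Background consumer: handle events until a None sentinel arrives."""
    while True:
        event = email_queue.get()
        if event is None:
            break
        # a real worker would call an email provider here
        sent_emails.append(event["email"])


worker = threading.Thread(target=email_worker)
worker.start()
response = onboard_user({"name": "Asha", "email": "asha@example.com"})
email_queue.put(None)  # tell the worker to stop
worker.join()
```

The handler returns as soon as the event is queued; the time spent sending the email no longer counts toward the API response time.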

Examples:

Azure Queue Storage

Consider an API that converts a high-quality image into a compressed one and then stores it in a cloud storage solution. Handled synchronously, the API response could take more than 30 seconds. With an event-driven architecture, suppose you have a queue that works on the First In, First Out (FIFO) principle, so jobs are processed in the order they arrive. (Note that Azure Queue Storage itself does not guarantee strict FIFO ordering.) The API's only task is to push the incoming payload to cloud storage and then push an event to the queue. A worker reading from the queue fetches the image, compresses it, and stores the result in cloud storage.
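The flow can be sketched with standard-library stand-ins. Everything here is an assumption made for illustration: a dict plays the role of cloud storage, a `deque` plays the role of the queue, `zlib` is only a placeholder for a real image compressor, and the function names are hypothetical.

```python
import zlib
from collections import deque

storage = {}          # stands in for cloud storage (blob name -> bytes)
job_queue = deque()   # stands in for the queue; popleft() gives FIFO order


def upload_image(name, data):
    """API handler: store the original and enqueue a compression job."""
    storage[name] = data
    job_queue.append({"blob": name})
    return {"status": "accepted", "blob": name}


def process_next_job():
    """Worker: take the oldest job, compress the blob, store the result."""
    job = job_queue.popleft()
    original = storage[job["blob"]]
    # zlib is only a placeholder for a real image compressor
    compressed_name = job["blob"] + ".compressed"
    storage[compressed_name] = zlib.compress(original)
    return compressed_name


upload_image("a.png", b"\x00" * 10_000)
upload_image("b.png", b"\x01" * 10_000)
first = process_next_job()
second = process_next_job()
```

The event carries only the blob name, not the image itself, which keeps queue messages small and leaves the heavy payload in storage.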

Azure Event Hub

In this scenario, a website generates leads frequently and an API receives that data. We need to process the data and insert it into the database and, at the same time, send each lead a welcome email. Here the API is a producer that writes events to an event hub. Two consumers listen to those events simultaneously (in Event Hubs, typically each through its own consumer group): one inserts the data into the database and the other sends the email. Put simply, the two consumers are two features that stand on their own, and neither depends on the other. This shows how EDA lets us decouple features.
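The independence of the two consumers can be illustrated with an in-memory sketch. This is not the Event Hubs SDK: `EventLog` and the group names `db-writer` and `mailer` are hypothetical, and the per-group offset only mimics the idea that each consumer tracks its own position in the same stream.

```python
class EventLog:
    """In-memory stand-in for an event hub: an append-only list of events."""

    def __init__(self):
        self.events = []
        self.offsets = {}  # consumer group name -> index of next event to read

    def publish(self, event):
        self.events.append(event)

    def poll(self, group):
        """Return the events this group has not seen yet and advance its offset."""
        start = self.offsets.get(group, 0)
        self.offsets[group] = len(self.events)
        return self.events[start:]


log = EventLog()
saved_leads, welcomed = [], []

log.publish({"name": "Ravi", "email": "ravi@example.com"})
log.publish({"name": "Mina", "email": "mina@example.com"})

# consumer 1: insert leads into the database
for lead in log.poll("db-writer"):
    saved_leads.append(lead["name"])

# consumer 2: send welcome emails; it reads the same events independently
for lead in log.poll("mailer"):
    welcomed.append(lead["email"])
```

Because each group keeps its own offset, one consumer can be slow, stopped, or redeployed without affecting what the other one reads.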

What is Azure Event Hubs?

Azure Event Hubs is a big data streaming platform and event ingestion service. It can receive and process millions of events per second. Data sent to an event hub can be transformed and stored by using any real-time analytics provider or batching/storage adapters.

The main features are:

It supports the AMQP, HTTPS, and Apache Kafka protocols

Scalable

Secure

How do we develop EDA using the event hub?

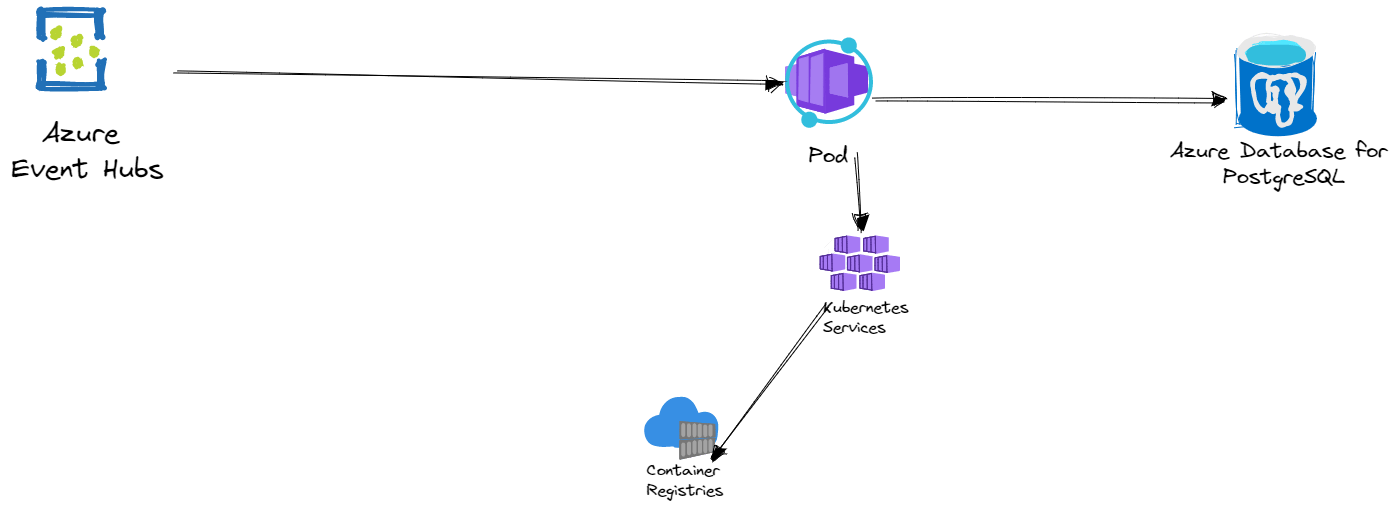

We had a banking WebSocket that sends data in real time, so it emits a large volume of messages every second. The main task was to insert each message into the database as the event occurs. We did not want to write complex, monolithic code, so we used Event Hubs as our message broker. For this implementation, we split the code into two repositories, one for the producer and one for the consumer. The producer repository holds the connection to the WebSocket and pushes the data it receives to the event hub. The consumer receives those events from the event hub and inserts them into the database.
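A rough sketch of the two sides, using the synchronous clients from the `azure-eventhub` Python package, might look like this. It is not our production code: `build_payload`, `send_events`, `consume_events`, the message shape, and the use of the `$Default` consumer group are assumptions made for illustration, and the connection string and hub name must come from your own Azure setup.

```python
import json


def build_payload(event_name, data):
    """Serialize one WebSocket message into the JSON body of an event."""
    return json.dumps({"event_name": event_name, "data": data})


def send_events(conn_str, eventhub_name, payloads):
    """Producer side: send a list of JSON strings as one batch."""
    # imported here so build_payload works without the SDK installed
    from azure.eventhub import EventData, EventHubProducerClient

    producer = EventHubProducerClient.from_connection_string(
        conn_str, eventhub_name=eventhub_name
    )
    with producer:
        batch = producer.create_batch()
        for payload in payloads:
            # add() raises ValueError if the batch is already full
            batch.add(EventData(payload))
        producer.send_batch(batch)


def consume_events(conn_str, eventhub_name, handle):
    """Consumer side: call handle(dict) for every event received."""
    from azure.eventhub import EventHubConsumerClient

    def on_event(partition_context, event):
        handle(json.loads(event.body_as_str()))

    consumer = EventHubConsumerClient.from_connection_string(
        conn_str, consumer_group="$Default", eventhub_name=eventhub_name
    )
    with consumer:
        # blocks, reading each partition from its beginning
        consumer.receive(on_event=on_event, starting_position="-1")
```

The producer never imports anything from the consumer and vice versa; the only contract between the two repositories is the JSON shape of the event body.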

Another challenge we faced was the number of events: the WebSocket sends around 36 different events that the consumer has to handle. In our Python code, we added a config file that maps each event name to its database function(s). To run the database operation, we look up the incoming event's name in the config. This reduced the code complexity drastically.

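    # Maps each event name to the database function(s) that handle it.
    event_config = [
        {
            "event_name": "******",
            "function_name": ["*******"],
        }
    ]


    async def execute(event):
        # event received from the Event Hubs producer; it is assumed
        # to carry its name in an "event_name" field
        event_name = event.get("event_name")
        filtered_event = [
            config for config in event_config
            if config.get("event_name") == event_name
        ]
        if filtered_event:
            # db_connect is the project's database helper (not shown here);
            # run every function configured for this event
            for function_name in filtered_event[0]["function_name"]:
                db_connect.execute(function_name)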
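For readers who want something they can run, here is a self-contained variant of the same lookup idea. It is a sketch under assumptions: the event names `trade` and `balance`, the handlers `insert_trade` and `update_balance`, and the `results` list (which replaces the real database) are all hypothetical, and a dict keyed by event name is used in place of filtering a list.

```python
import asyncio

results = []  # records calls so the example can run without a real database


async def insert_trade(event):
    results.append(("insert_trade", event["data"]))


async def update_balance(event):
    results.append(("update_balance", event["data"]))


# event name -> handlers to run, in order
EVENT_HANDLERS = {
    "trade": [insert_trade, update_balance],
    "balance": [update_balance],
}


async def execute(event):
    """Run every handler registered for the event; ignore unknown events."""
    for handler in EVENT_HANDLERS.get(event.get("event_name"), []):
        await handler(event)


asyncio.run(execute({"event_name": "trade", "data": {"id": 1}}))
asyncio.run(execute({"event_name": "unknown", "data": {}}))
```

Supporting a new event then means adding one entry to the mapping rather than another branch in the consumer.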

Scalability is naturally achieved in this pattern through highly independent and decoupled event processors. Each event processor can be scaled separately, allowing for fine-grained scalability.